Following on from my recent post on Power BI and Excel 2016 news, here are some more details about the new BI-related features in the Excel 2016 Preview. Remember that more BI-related features may appear before the release of Excel 2016, and that with Office 365 click-to-run significant new features can appear in between releases. This is therefore not a definitive list of what Excel 2016 will be able to do at RTM, but a snapshot of the functionality available as of March 2015, as outlined in this document and as I’ve found from my own investigations. When I find out more, or when new functionality appears, I’ll either update this post or write a new one.

Power Query

Yesterday, in the original version of my post, I mistakenly said that Power Query was a native add-in in Excel 2016: that’s not true, it’s not an add-in at all, it’s native Excel functionality. Indeed, you can see that there is no separate Power Query tab any more; instead there is a Power Query section on the Data tab:

![DataTab DataTab]()

Obviously I’m a massive fan of Power Query so I’m biased, but I think this is a great move because it makes all the great Power Query functionality a lot easier to discover. There’s nothing to enable – it’s there by default – although I am a bit worried that users will be confused by having the older Data tab features next to their Power Query equivalents.

There are no new features for Power Query here compared to the latest version for Excel 2013, but that’s what I expected.

Excel Forecasting Functions

I don’t pretend to know anything about forecasting, but I had a brief play with the new FORECAST.ETS function and got some reasonable results out of it, as seen in the screenshot below:

![image image]()

Slicer Multiselect

There’s a new hammer icon on a slicer which, when you click it, changes the way selection works. The default behaviour is the same as Excel 2013: every time you click on an item, that item is selected and any previous selection is lost (unless you were holding Ctrl or Shift to multiselect). However, with the hammer icon selected, each new click adds the item to the previously selected items. This is meant to make slicers easier to use with a touch-screen.

![Slicer Slicer]()
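To make the difference between the two modes concrete, here is a minimal Python sketch of the selection logic as described above. The `click` function and the sample items are made up for illustration, and it ignores the Ctrl/Shift behaviour; it is not how Excel implements slicers.

```python
def click(selected, item, multiselect=False):
    """Return the new slicer selection after clicking `item`.

    `selected` is the set of currently selected items. This only models
    the behaviour described in the post; it is not Excel's implementation.
    """
    if multiselect:
        # Multiselect mode: each click adds to the existing selection.
        return selected | {item}
    # Default mode: the clicked item replaces any previous selection.
    return {item}


# Hypothetical slicer items, clicked one after another in each mode.
default_mode = set()
multi_mode = set()
for item in ["2013", "2014", "2015"]:
    default_mode = click(default_mode, item)
    multi_mode = click(multi_mode, item, multiselect=True)

print(sorted(default_mode))  # only the last item clicked
print(sorted(multi_mode))    # every item clicked so far
```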

Time Grouping in PivotTables

Quite a neat feature this, I think. If you have a table in the Excel Data Model that has a column of type date in it, you can add extra calculated columns to that table from within a PivotTable to group by things like Year and Month. For example, here’s a PivotTable I built on a table that contains just dates:

![Group1 Group1]()

Right-clicking on the field containing the dates and clicking Group brings up the following dialog:

![Group2 Group2]()

Choosing Years, Quarters and Months creates three extra fields in the PivotTable:

![Group3 Group3]()

And these fields are implemented as calculated columns in the original table in the Excel Data Model, with DAX definitions as seen here:

![Group4 Group4]()
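The actual DAX for these calculated columns is only visible in the screenshot, so I won’t try to reproduce it here. As a rough analogue, this Python sketch derives the same three grouping values from a single date. The function name and the label formats (`Qtr1`, `Jan`) are my own assumptions for illustration, not something taken from the Data Model.

```python
from datetime import date

# Fixed month labels, so the output does not depend on the machine's locale.
MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def time_groups(d):
    """Derive Year, Quarter and Month grouping values from one date."""
    quarter = (d.month - 1) // 3 + 1  # months 1-3 -> 1, 4-6 -> 2, and so on
    return {
        "Year": d.year,
        "Quarter": f"Qtr{quarter}",
        "Month": MONTHS[d.month - 1],
    }


# Invented sample dates standing in for the date column in the table.
for d in [date(2015, 1, 15), date(2015, 3, 24), date(2015, 11, 2)]:
    print(d.isoformat(), time_groups(d))
```

Each extra field in the PivotTable plays the role of one key in the returned dictionary: a value computed row by row from the original date column.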

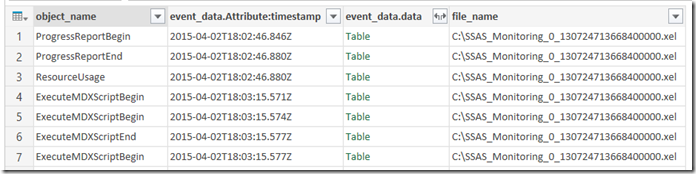

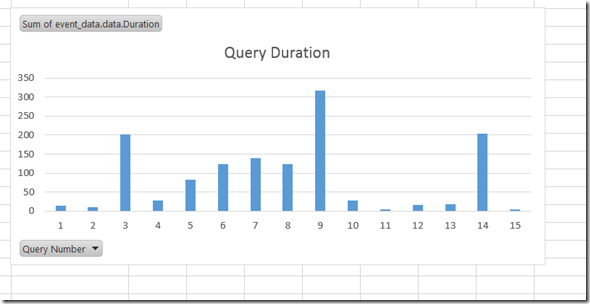

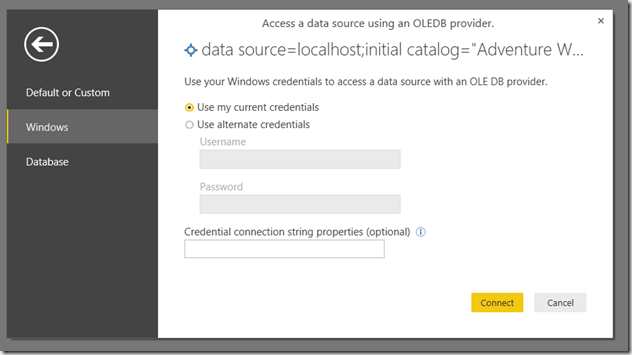

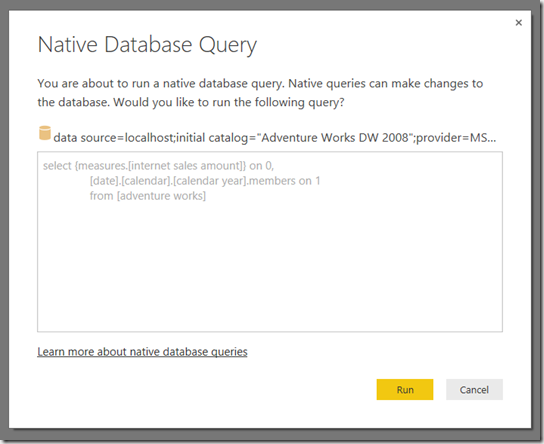

Power View on SSAS Multidimensional

At-bloody-last. I haven’t installed SSAS on the VM I’m using for testing Excel 2016, but I assume it just works. Nothing new in Power View yet, by the way.

Power Map data cards

Not sure why this is listed as new in Excel 2016 when it seems to be the same feature that appeared in Excel 2013 Power Map recently:

https://support.office.com/en-za/article/Customize-a-data-card-in-Power-Map-797ab684-82e0-4705-a97f-407e4a576c6e

Power Pivot

There isn’t any obvious new functionality in the Power Pivot window, but it’s clear that the UI in general and the DAX formula editor experience in particular have been improved.

![image image]()

Suggested Relationships

When you use fields from two Excel Data Model tables that have no relationship between them in a PivotTable, you get a prompt to either create new relationships yourself or let Excel detect the relationships:

![image image]()

Renaming Tables and Fields in the Power Pivot window

In Excel 2013, when you renamed tables or fields in the Excel Data Model, any PivotTables that used those objects had them deleted. Now, in Excel 2016, the PivotTable retains the reference to the table or field and just displays the new name. What’s even better is that when you have a measure or a calculated column that refers to a table or column, the DAX definition of that measure or calculated column gets updated after a rename too.

DAX

There are lots of new DAX functions in this build. With the help of the mdschema_functions schema rowset and Power Query I was able to compare the list of DAX functions available in 2016 with those in 2013 and create the following list of new DAX functions and descriptions:

| FUNCTION NAME | DESCRIPTION |
| --- | --- |
| DATEDIFF | Returns the number of units (unit specified in Interval) between the input two dates |
| CONCATENATEX | Evaluates expression for each row on the table, then return the concatenation of those values in a single string result, separated by the specified delimiter |
| KEYWORDMATCH | Returns TRUE if there is a match between the MatchExpression and Text. |
| ADDMISSINGITEMS | Add the rows with empty measure values back. |
| CALENDAR | Returns a table with one column of all dates between StartDate and EndDate |
| CALENDARAUTO | Returns a table with one column of dates calculated from the model automatically |
| CROSSFILTER | Specifies cross filtering direction to be used in the evaluation of a DAX expression. The relationship is defined by naming, as arguments, the two columns that serve as endpoints |
| CURRENTGROUP | Access to the (sub)table representing current group in GroupBy function. Can be used only inside GroupBy function. |
| GROUPBY | Creates a summary the input table grouped by the specified columns |
| IGNORE | Tags a measure expression specified in the call to SUMMARIZECOLUMNS function to be ignored when determining the non-blank rows. |
| ISONORAFTER | The IsOnOrAfter function is a boolean function that emulates the behavior of Start At clause and returns true for a row that meets all the conditions mentioned as parameters in this function. |
| NATURALINNERJOIN | Joins the Left table with right table using the Inner Join semantics |
| NATURALLEFTOUTERJOIN | Joins the Left table with right table using the Left Outer Join semantics |
| ROLLUPADDISSUBTOTAL | Identifies a subset of columns specified in the call to SUMMARIZECOLUMNS function that should be used to calculate groups of subtotals |
| ROLLUPISSUBTOTAL | Pairs up the rollup groups with the column added by ROLLUPADDISSUBTOTAL |
| SELECTCOLUMNS | Returns a table with selected columns from the table and new columns specified by the DAX expressions |
| SUBSTITUTEWITHINDEX | Returns a table which represents the semijoin of two tables supplied and for which the common set of columns are replaced by a 0-based index column. The index is based on the rows of the second table sorted by specified order expressions. |
| SUMMARIZECOLUMNS | Create a summary table for the requested totals over set of groups. |
| GEOMEAN | Returns geometric mean of given column reference. |
| GEOMEANX | Returns geometric mean of an expression values in a table. |
| MEDIANX | Returns the 50th percentile of an expression values in a table. |
| PERCENTILE.EXC | Returns the k-th (exclusive) percentile of values in a column. |
| PERCENTILE.INC | Returns the k-th (inclusive) percentile of values in a column. |
| PERCENTILEX.EXC | Returns the k-th (exclusive) percentile of an expression values in a table. |
| PERCENTILEX.INC | Returns the k-th (inclusive) percentile of an expression values in a table. |
| PRODUCT | Returns the product of given column reference. |
| PRODUCTX | Returns the product of an expression values in a table. |
| XIRR | Returns the internal rate of return for a schedule of cash flows that is not necessarily periodic |
| XNPV | Returns the net present value for a schedule of cash flows |
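The comparison behind this list boils down to a set difference between two lists of function names. I did it in Power Query, but the idea can be sketched in a few lines of Python. The two sample lists below are hypothetical and heavily truncated stand-ins for the rowset output, and `new_functions` is just a name I’ve made up for the sketch.

```python
def new_functions(older, newer):
    """Return the function names found in `newer` but not in `older`, sorted."""
    older_names = {name.upper() for name in older}
    newer_names = {name.upper() for name in newer}
    return sorted(newer_names - older_names)


# Hypothetical, truncated samples of the function names from each version.
functions_2013 = ["SUM", "SUMX", "CALCULATE", "SUMMARIZE"]
functions_2016 = ["SUM", "SUMX", "CALCULATE", "SUMMARIZE",
                  "DATEDIFF", "CONCATENATEX"]

print(new_functions(functions_2013, functions_2016))
# ['CONCATENATEX', 'DATEDIFF']
```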

Plenty of material for future blog posts there, I think – there are lots of functions here that will be very useful. I bet Marco and Alberto are excited…
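To give a feel for what a few of these functions calculate, here are some rough Python analogues based on the descriptions in the list. This is a sketch, not DAX: the sample values are invented, and the `xnpv` helper assumes the same convention as the Excel worksheet function of the same name (discounting by days since the first cash flow over a 365-day year), which I haven’t verified against the DAX version.

```python
import math
import statistics
from datetime import date

# Invented sample column values.
values = [2.0, 8.0, 4.0]

# PRODUCT / PRODUCTX: multiply the values together.
product = math.prod(values)

# GEOMEAN / GEOMEANX: the n-th root of the product of n values.
geomean = statistics.geometric_mean(values)

# MEDIANX: the 50th percentile of the values.
median = statistics.median(values)

# CONCATENATEX: join one value per row into a single delimited string.
joined = ", ".join(["Bikes", "Clothing", "Accessories"])


def xnpv(rate, cashflows):
    """Net present value of (date, amount) pairs that need not be periodic.

    Each amount is discounted by the number of days since the first
    cash flow, expressed as a fraction of a 365-day year (an assumption
    borrowed from the Excel worksheet function).
    """
    first_date = cashflows[0][0]
    return sum(
        amount / (1 + rate) ** ((when - first_date).days / 365)
        for when, amount in cashflows
    )


# Invented cash flows: pay 1000 now, receive 1100 exactly 365 days later.
flows = [(date(2015, 1, 1), -1000.0), (date(2016, 1, 1), 1100.0)]
print(product, geomean, median, joined, xnpv(0.1, flows))
```

At a 10% rate the two cash flows cancel out, so the net present value comes out at (approximately) zero.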

VBA

It looks like we have support for the Excel Data Model (aka Power Pivot) in VBA at last.

![VBAModel VBAModel]()

I need to do some research here, but I get the distinct feeling that the only things that are possible through VBA are the things you can do in the Excel ribbon, such as creating connections, tables and relationships. I can’t see any support for creating measures, calculated columns or hierarchies…? I can’t see anything relating to Power Query either. Maybe I’m not looking in the right place; maybe something will come in a later build?

UPDATE: I’m an idiot – there is one minor change to the VBA support for the Excel Data Model, but actually almost everything that I see in 2016 is also present in 2013. Sorry…
