Back in September I wrote a post on handling situations where the data source for your Power Query query was unavailable. The great thing about that technique is that instead of seeing an error message when you refresh your query, instead you get an empty table with the same structure – which means that the Excel Data Model doesn’t get messed up, you don’t lose relationships and all your PivotTables remain intact. However it means you no longer see the error message (unless you return it in the table somehow, which isn’t ideal), which makes it hard to debug. What you really want is to get the empty table AND the error message somehow. But surely a Power Query query can only return a single value as an output?

Actually no. Every value in M can have a metadata record associated with it, and we can use this here to our advantage (I’ve been wondering whether there was a use for metadata ever since I read about it in the Language spec… and at long last I’ve found one!). Here’s a simple example of a query that shows how to associate a metadata record with a value:

let

Source = "Some Random Value" meta [message = "Hello World", somenumber = 123],

Output = Source

in

Output

The output of this query is just the text “Some Random Value”:

At this point we’re setting the metadata but not doing anything with it. The following query uses the Value.Metadata() function to get the metadata record:

let

Source = "Some Random Value" meta [message = "Hello World", somenumber = 123],

Output = Value.Metadata(Source)

in

Output

The output is now a record value:

Basically, this means that in scenarios where we want to handle a data source error we can always return a table and at the same time return any error messages in the metadata.

Here’s a similar scenario to the one I showed in my original blog post, but where the query does a select distinct on the EnglishDayNameOfWeek column in the DimDate table in the Adventure Works DW database:

let

//Connect to SQL Server

Source = Sql.Database("localhost", "adventure works dw"),

//Get DimDate table

dbo_DimDate = Source{[Schema="dbo",Item="DimDate"]}[Data],

//Remove all other columns except EnglishDayNameOfWeek

#"Removed Other Columns" = Table.SelectColumns(dbo_DimDate,{"EnglishDayNameOfWeek"}),

//Get distinct values from this column

#"Removed Duplicates" = Table.Distinct(#"Removed Other Columns"),

//Output in case of error

AlternativeOutput=#table(type table [EnglishDayNameOfWeek=text], {}),

//Does the Removed Duplicates set error?

TestForError= try #"Removed Duplicates",

Output = if TestForError[HasError]

then

//In case of error return empty table

//and attach error message in metadata

(AlternativeOutput meta [message = TestForError[Error]])

else

//If there's no error

//just return the table plus a message

(#"Removed Duplicates" meta [message = "Query executed successfully"])

in

Output

The output when the query (called DimDate) executes successfully, shown in an Excel table, is just a list of day names:

You can now create a second query in the same workbook with the following definition to get the metadata record associated with the output of this query:

let

Source = Value.Metadata(DimDate),

message = Source[message]

in

message

The output of this query, when the first query is successful, is:

However, if you rename the EnglishDayNameOfWeek column in SQL Server, the first query now returns an empty table:

The second query now returns the error message when it’s refreshed:

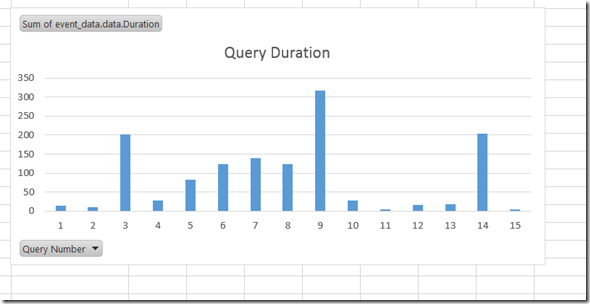

One thing to be careful of, bearing in mind what we learned about Power Query and M in my last post, is that the output of the first query is not cached after it has been executed so executing the second query to get the error message will involve at least a partial re-execution of the first query. However in this particular case if you look in Profiler you can see Power Query checking to see whether the EnglishDayNameOfWeek column exists in the DimDate table before it tries to do the select distinct SQL query, and this is enough to know whether the query will fail or not – so whether there is an error or not, running the second query to get the message does not result in the (potentially expensive) select distinct SQL query being executed.

You can download the sample workbook for this post here.

![clip_image001[6] clip_image001[6]](http://cwebbbi.files.wordpress.com/2014/11/clip_image0016_thumb.jpg?w=613&h=393)