The other day, while I was reading this post by Melissa Coates, I was reminded of the existence of extended events in SSAS. I say ‘reminded’ because although this is a subject I’ve blogged about before, I have never done anything serious with extended events because you can get the same data from Profiler much more easily, so I had pretty much forgotten about them. But… while Profiler is good, it’s a long way from perfect and there’s a lot of information that you can get from a trace that is still very hard to analyse. I started thinking: what if there was a tool we could use to analyse the data captured by extended events easily? [Lightbulb moment] Of course, Power Query!

I’m not going to go over how to use Extended Events in SSAS because the following blog posts do a great job already:

http://byobi.com/blog/2013/06/extended-events-for-analysis-services/

http://markvsql.com/2014/02/introduction-to-analysis-services-extended-events/

https://francescodechirico.wordpress.com/2012/08/03/identify-storage-engine-and-formula-engine-bottlenecks-with-new-ssas-xevents-5/

You may also want to check out these (old, but still relevant) articles on performance tuning SSAS taken from the book I co-wrote with Marco and Alberto, “Expert Cube Development”:

http://www.packtpub.com/article/query-performance-tuning-microsoft-analysis-services-part1

http://www.packtpub.com/article/query-performance-tuning-microsoft-analysis-services-part2

What I want to concentrate on in this series of posts is how to make sense of this data using Power BI in general and Power Query in particular. The first step is to be able to load data from the .xel file using Power Query, and that’s what this post will cover. In the future I want to explore how to get at and use specific pieces of text data such as that given by the Query Subcube Verbose, Calculation Evaluation and Resource Usage events, and to show how this data can be used to solve difficult performance problems. I’m only going to talk about SSAS Multidimensional, but of course a lot of what I show will be applicable (or easily adapted to) Tabular; I guess you could also do something similar for SQL Server Extended Events too. I’m also going to focus on ad hoc analysis of this data, rather than building a more generic performance monitoring solution; the latter is a perfectly valid thing to want to build, but why build one yourself when companies like SQL Sentry have great tools for this purpose that you can buy off the shelf?

Anyway, let’s get on. Here’s a Power Query function that can be used to get data from one or more .xel files generated by SSAS:

(servername as text,

initialcatalog as text,

filename as text)

as table =>

let

//Query the xel data

Source = Sql.Database(servername,

initialcatalog,

[Query="SELECT

object_name, event_data, file_name

FROM sys.fn_xe_file_target_read_file ( '"

& filename & "', null, null, null )"]),

//Treat the contents of the event_data column

//as XML

ParseXML = Table.TransformColumns(Source,

{{"event_data", Xml.Tables}}),

//Expand that column

Expandevent_data = Table.ExpandTableColumn(ParseXML,

"event_data",

{"Attribute:timestamp", "data"},

{"event_data.Attribute:timestamp",

"event_data.data"}),

//A function to tranpose the data held in the

//eventdata.data column

GetAttributeData = (AttributeTable as table) as table =>

let

RemoveTextColumn = Table.RemoveColumns(AttributeTable,

{"text"}),

SetTypes = Table.TransformColumnTypes(RemoveTextColumn ,

{{"value", type text}, {"Attribute:name", type text}}),

TransposeTable = Table.Transpose(SetTypes),

ReverseRows = Table.ReverseRows(TransposeTable),

PromoteHeaders = Table.PromoteHeaders(ReverseRows)

in

PromoteHeaders,

//Use the function above

ParseAttributeData = Table.TransformColumns(Expandevent_data,

{"event_data.data", GetAttributeData})

in

ParseAttributeData

This function can be thought of as the starting point for everything else: it allows you to load the raw data necessary for any SSAS performance tuning work. Its output can then, in turn, be filtered and transformed to solve particular problems.

The function takes three parameters:

- The name of a SQL Server relational database instance – this is because I’m using sys.fn_exe_file_target_read_file to actually read the data from the .xel file. I guess I could try to parse the binary data in the .xel file, but why make things difficult?

- The name of a database on that SQL Server instance

- The file name (including the full path) or pattern for the .xel files

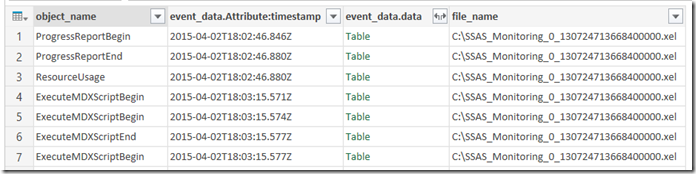

The only other thing to mention here is that the event_data column contains XML data, which of course Power Query can handle quite nicely, but even then the data in the XML needs to be cleaned and transposed before you can get a useful table of data. The GetAttributeData function in the code above does this cleaning and transposing but, when invoked, the function still returns an unexpanded column called event_data.data as seen in the following screenshot:

There are two reasons why the function does not expand this column for you:

- You probably don’t want to see every column returned by every event

- Expanding all the columns in a nested table, when you don’t know what the names of these columns are, is not trivial (although this post shows how to do it)

Here’s an example of how the function can be used:

let

//Invoke the GetXelData function

Source = GetXelData(

"localhost",

"adventure works dW",

"C:\SSAS_Monitoring*.xel"),

//Only return Query End events

#"Filtered Rows" = Table.SelectRows(Source,

each ([object_name] = "QueryEnd")),

//Expand Duration and TextData columns

#"Expand event_data.data" = Table.ExpandTableColumn(

#"Filtered Rows", "event_data.data",

{"Duration", "TextData"},

{"event_data.data.Duration",

"event_data.data.TextData"}),

//Set some data types

#"Changed Type" = Table.TransformColumnTypes(

#"Expand event_data.data",

{{"event_data.Attribute:timestamp", type datetime},

{"event_data.data.Duration", Int64.Type}}),

//Sort by timestamp

#"Sorted Rows" = Table.Sort(#"Changed Type",

{{"event_data.Attribute:timestamp", Order.Ascending}}),

//Add an index column to identify each query

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Query Number", 1, 1),

//Remove unwanted columns

#"Removed Columns" = Table.RemoveColumns(#"Added Index",

{"object_name", "file_name"})

in

#"Removed Columns"

All that’s happening here is that the function is being called in the first step, Source, and then I’m filtering by the Query End event, expanding some of the columns in event_data.data and setting column data types. You won’t need to copy all this code yourself though – you just need to invoke the function and then expand the event_data.data column to reveal whatever columns you are interested in. When you run a query that calls this function for the first time, you may need to give Power Query permission to connect to SQL Server and also to run a native database query.

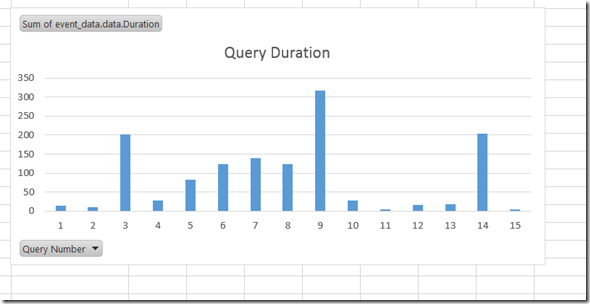

Here’s an example PivotChart showing query durations built from this data after it has been loaded to the Excel Data Model:

Not very useful, for sure, but in the next post you’ll see a more practical use for this function.

You can download the sample workbook for this post here.